Beyond Tweaks: The Real Deal with A/B Testing

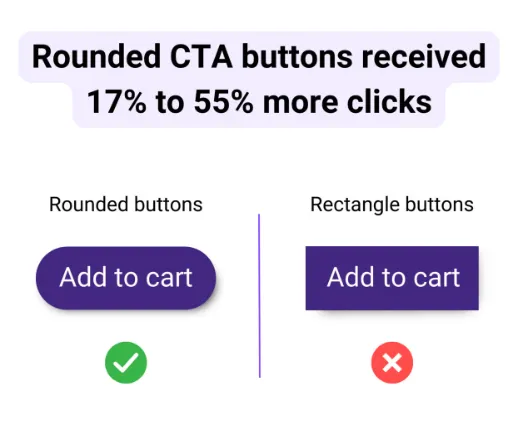

A recent study claims, “Curved CTA buttons received 17% to 55% higher clicks (CTR) than buttons with sharp angles” (Research date: December 2023, University of South Florida, University of Tennessee & Neighborly).

At first glance, it suggests that you will get up to 55% more clicks just by changing your button radius! What an impactful, easy-to-implement idea to make any business more successful. I can already see requests to change the button radius piling up in backlogs across the world.

We are not talking about a marketing influencer on LinkedIn here; it comes from a serious scientific paper reviewed by peers and published in a scientific journal.

Is it really possible? Are simple tweaks all you need to increase your website's CTR (click-through rate)?

When it sounds too good to be true, it probably is.

Will rounded corners on a button impact your business results? Maybe (if I’m being optimistic). The problem is not the result but the A/B testing methodology behind it, which we will question here.

To understand why, let’s look at what A/B testing is, how it works, and its main benefits. After all, A/B testing is the most popular method for making data-driven business decisions among tech giants.

But is it the right approach for you? Can you make business decisions based on the results of those tests? How easy is it to implement for your business?

Is it, again, too good to be true?

Let’s dive in. But first, what is A/B testing?

A/B Testing, Simply Explained

A/B testing, or controlled experimentation, is a direct descendant of the academic and scientific way of testing new ideas, applied to the business environment.

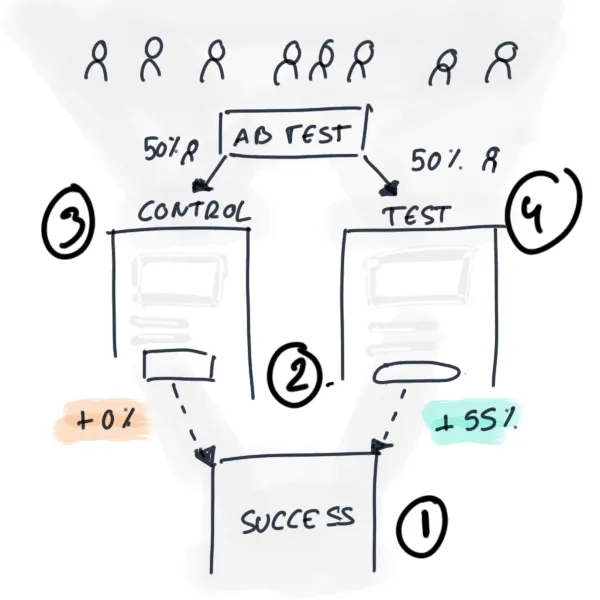

It involves four components:

- A Goal: What you want to achieve (e.g., more views of the success page).

- A Change: The modification you’re testing (e.g., button design).

- A Control version: The original version.

- A Test version: The modified version.

You’re testing a change on your test group to see if it significantly impacts the goal compared to the control group.

Your experiment is successful if the test version reaches the goal significantly more often than the control version.

If not, you change your hypothesis and try again…
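To make “significantly” a bit more concrete, here is a minimal sketch of one common way to compare a control and a test version on a conversion-style goal: a pooled two-proportion z-test. The function name and the visitor counts are hypothetical, and real experimentation platforms may use different statistical tests.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the goal rate of control (A) and test (B) with a pooled z-test.

    Returns the z statistic and the two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that the change has no effect
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: visitors per group who reached the success page
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=570, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below the chosen threshold (often 0.05) is what teams usually call a “significant” result.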

Why do Tech Companies love A/B tests?

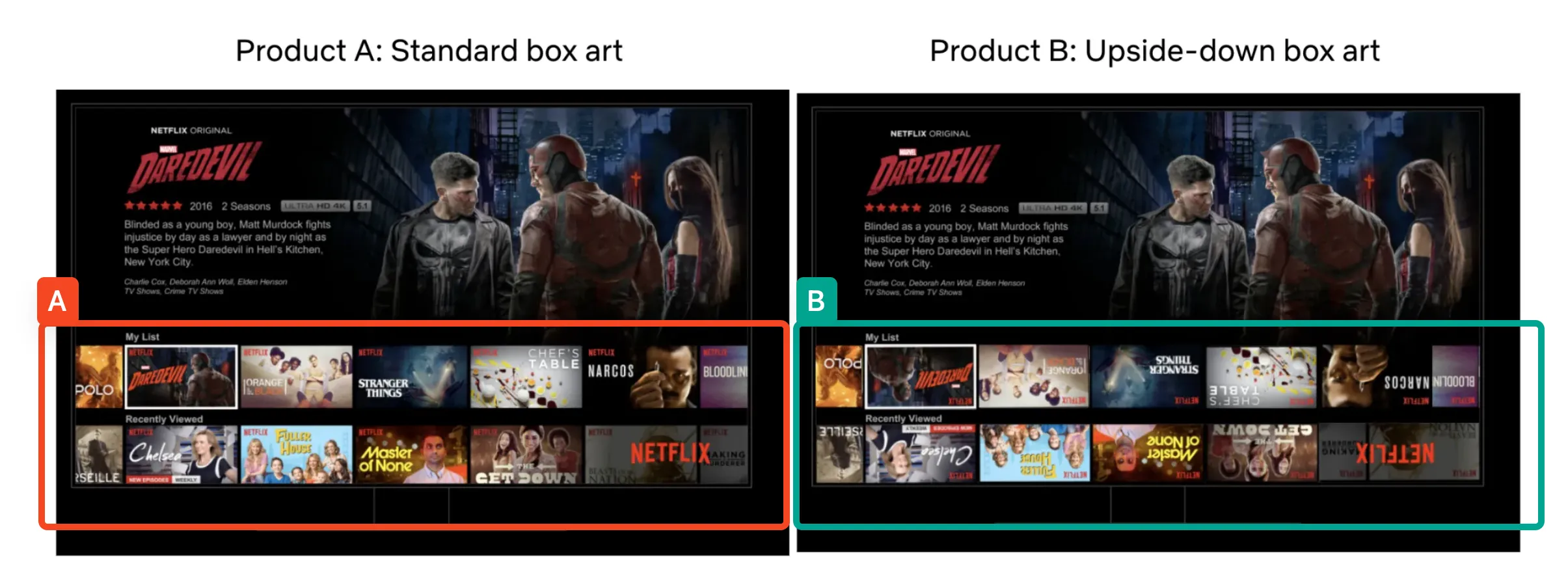

You have probably seen it on social media: companies like Google increased clicks on a button by XX% just by changing its color. This is not a lie. Companies like Meta, Google, and Microsoft run more than 10,000 A/B tests per year to improve their digital products. At some of these companies, a feature reportedly cannot make it to production without passing an A/B test.

Why? Here are the 4 main reasons I hear the most:

1) It’s the most trusted way to make decisions.

A/B testing is the most trusted method of collecting user insights to make business decisions.

Since A/B tests happen without users’ knowledge, their behavior is not biased. They act “in real life” rather than “in a lab,” where social desirability affects the results. Users don’t try to please an interviewer or fear judgment; with “in real life” tests like A/B testing, they are their true selves.

2) It is the most scientific approach to making business decisions.

A/B testing is seen as the scientific way to solve business problems. It directly replicates the scientific method and copies how scientists have experimented with new ideas over the past 100 years.

“Netflix uses the scientific method to inform decision making. We form hypotheses, gather empirical data, including from experiments, that provide evidence for or against our hypotheses, and then make conclusions and generate new hypotheses.” Netflix team on Medium.

Thanks to A/B testing, managers make rational decisions based on data. No more relying on the Highest Paid Person’s Opinion (HiPPO) or the latest recommendations from the sales team.

The product team can ship whatever they want, as long as it improves business results during a test.

3) It leverages data, and tech companies love data.

Data used to take time to collect and understand. If you were a data scientist 50 years ago, collecting data from 10,000 people could have taken months or even years.

In our digital world, tech companies have access to a lot of data. With billions of users, they can run a statistically valid experiment in days or weeks.

4) It’s easy to get approval and consensus

It’s straightforward to start and easy to get stakeholder buy-in. Who would argue against science-based, data-driven decisions?

That said, all of the above comes with conditions. If you are a tech giant with near-unlimited access to data and users and an experimentation system already in place, A/B testing is the answer to most of your product decisions.

However, if any of the following applies to you:

- Less than 100,000 visitors a month

- An expensive cost to acquire new users

- No internal culture of experimentation

- Urgency to grow your business

Then A/B testing is probably not for you, and it might even hurt your business…

The Problem with A/B Testing

A/B testing results feel good. You look at the results and reach a consensus in the room in seconds. You might like the results or not, but you will decide and move on quickly. After all, the results are precise, and we all trust science, right?

As mentioned earlier, it’s complicated. Here are the main limitations I have learned about over the past years:

It is limited to a specific context

A/B testing only tests specific contexts. These contexts are relatively narrow and only give insights into the controlled environment you set up.

For example, say you come up with the great idea of testing how the button radius affects the CTR on a page. The goal of your test is to find the version that generates the most clicks on that button.

However, you cannot foresee the impact of this change further down the user journey. Maybe users convert more easily, but they might also leave faster. Who knows?

One way to overcome the narrow focus of A/B testing is to use an Overall Evaluation Criterion (OEC): a combination of metrics that can detect improvements over a short period while being a good predictor of long-term goals.

For most businesses, defining an OEC is a complex process, and the result is difficult to measure. Trying to widen the context adds even more complexity and time to the decision-making process.
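A minimal sketch of one simple way to build an OEC: a weighted sum of the relative changes in several metrics between test and control. The metric names, weights, and results below are hypothetical; choosing weights that genuinely predict long-term value is exactly the hard part.

```python
def oec_score(deltas: dict[str, float], weights: dict[str, float]) -> float:
    """Combine relative metric changes (test vs. control) into one weighted score.

    deltas: relative change per metric, e.g. 0.15 for +15%.
    weights: how much each metric counts toward the long-term goal (hypothetical).
    """
    missing = set(weights) - set(deltas)
    if missing:
        raise ValueError(f"missing metrics: {sorted(missing)}")
    return sum(weights[m] * deltas[m] for m in weights)


# Hypothetical weights: clicks matter far less than purchases and retention
weights = {"ctr": 0.1, "purchase_rate": 0.6, "7d_retention": 0.3}
# Hypothetical result: CTR up 15%, purchases flat, 7-day retention down 5%
deltas = {"ctr": 0.15, "purchase_rate": 0.0, "7d_retention": -0.05}
score = oec_score(deltas, weights)
print(f"OEC change: {score:+.3f}")
```

With these assumed weights, the CTR win is cancelled out by the retention loss, so the OEC shows no improvement at all.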

Clicks won’t impact your business results.

Say you decide to move forward and test the button radius anyway.

The results come back: the new radius improved the CTR by 15%. Congratulations.

Now what? You have more people clicking on a button. Does it drive more revenue for your company? Does it increase customer lifetime value? A/B testing only applies to a specific context, and it’s hard to demonstrate its business impact.
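A quick back-of-the-envelope sketch shows why a CTR win alone cannot answer that question. It assumes a simple funnel where revenue per visitor is CTR × checkout rate × average order value; all the numbers and the function name are hypothetical.

```python
def revenue_per_visitor(ctr: float, checkout_rate: float, avg_order_value: float) -> float:
    """Revenue per visitor for a simple click -> checkout funnel (hypothetical model)."""
    return ctr * checkout_rate * avg_order_value


# Hypothetical control funnel
control = revenue_per_visitor(ctr=0.10, checkout_rate=0.20, avg_order_value=50.0)
# Hypothetical test: CTR up 15%, but the extra clickers check out less often
test = revenue_per_visitor(ctr=0.115, checkout_rate=0.17, avg_order_value=50.0)
print(f"control: {control:.3f}, test: {test:.3f}, change: {test / control - 1:+.1%}")
```

Under these assumptions, the “winning” button loses a little revenue per visitor. A click-only test would never show it.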

Plus, A/B testing is better suited for small, incremental changes. For small businesses, those micro-improvements feel good but rarely matter for business growth. You have more clicks, so what?

Finally, another limitation I found is that the results of A/B testing don’t add up. If you run experiment after experiment, you might optimize conversion by 5% here and 7% there. At the end of the year, your business won’t grow by 12% thanks to the curve and color of a button.
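Even on paper, relative lifts from separate experiments multiply rather than add, and that is the optimistic case. This minimal sketch assumes every measured lift is real, independent of the others, and persists over time; in practice, measured wins often shrink when re-measured.

```python
from math import prod


def combined_lift(lifts: list[float]) -> float:
    """Best-case combined lift if independent relative lifts compound multiplicatively."""
    return prod(1 + lift for lift in lifts) - 1


# A 5% win and a 7% win, under the optimistic assumptions above
print(f"{combined_lift([0.05, 0.07]):.2%}")
```

The 5% and 7% wins compound to about 12.35% in this best case; any overlap between the two changes, novelty effects, or false positives pull the real number lower.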

I understand that it feels good to have data-backed, measurable improvements, but they are mostly irrelevant, and they take up more of your precious time.

A/B testing takes time

If you are not a tech giant, A/B testing will take most of your focus and delay your business decisions.

Imagine you have 10,000 visitors to your blog post and want to test a new headline. It will take you two months (if you are lucky) to get significant results and another two days to implement the changes. By then, most of your users will have already seen the blog post, and your test results will no longer be relevant.
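To see why traffic matters so much, here is a rough sample-size sketch using the standard normal-approximation formula for comparing two proportions. The 5% baseline CTR, the 10% relative lift, the 0.05 significance level, and the 80% power are assumptions chosen for illustration, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per group for a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical headline test: 5% baseline CTR, hoping to detect a 10% relative lift
n = sample_size_per_group(baseline=0.05, relative_lift=0.10)
print(n, "visitors per headline,", 2 * n, "in total")
```

Under these assumptions, the test needs roughly 31,000 visitors per headline, so around 62,000 in total: several times the 10,000 visitors in the example.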

Was it worth the test?

Is a 10% uplift in clicks on a blog post worth the time and effort you put in?

If you still think it’s worth it, consider your odds: only about 1 in 10 experiments succeeds.

“Only 8% of the experiments run had an impact on revenue. 92% were just failing. We are talking here about one of the most innovative, user-centric teams, and still 92% of their best ideas failed.” Ronny Kohavi, talking about Airbnb’s search team.

And even among that 8%, a statistically significant result with a p-value below 0.05 was no guarantee: the teams at Airbnb realized there was a 26% chance that such a result was a false positive.
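The 26% figure follows from Bayes’ rule rather than from anything specific to Airbnb’s tooling. Here is a minimal sketch, assuming an 8% prior success rate, a two-sided 0.05 threshold where only the positive tail counts as a “win” (so half of alpha applies), and 80% statistical power; these assumptions reproduce the 26% figure, and the function name is hypothetical.

```python
def false_positive_risk(prior_success: float, alpha: float = 0.05, power: float = 0.8) -> float:
    """Probability that a statistically significant 'win' is actually a false positive.

    Assumes only the positive direction counts as a win, so alpha / 2 applies.
    """
    false_wins = (alpha / 2) * (1 - prior_success)  # ideas with no effect that look like wins
    true_wins = power * prior_success               # ideas that truly work and are detected
    return false_wins / (false_wins + true_wins)


print(f"{false_positive_risk(prior_success=0.08):.1%}")
```

The lower your prior success rate, the larger the share of “wins” that are noise.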

With only an 8% success rate, and a high chance that even those successes are false positives, can you still justify going through this pain?

For large audiences (above 100,000 users a month), every slight improvement is worth the effort; I get it. But for most businesses I know and work with, it’s definitely not worth the time and effort.

A/B testing is all about trust.

When I worked at Nestle, I ran A/B tests almost every day. One day, I made a mistake: my control version was identical to my challenger version. After two weeks of testing, the results came back, and my challenger won with a 3% uplift. Again, my two versions were identical! The results were statistically significant. It made no sense; I should have gotten the same results for both!

After some research, I realized I had accidentally run an A/A test. Based on those results, I could no longer trust my testing infrastructure.

Trust and reliability are not given when running A/B testing.

When running tests, you need to trust the technical platform. The best way to check the platform is to run an A/A test: you test exactly the same version against itself to see whether you can trust your technical setup. If the two identical versions produce significantly different results, something is wrong with your platform, and you can’t trust it moving forward.
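One way to run that check systematically is to look at many A/A tests rather than one. Here is a minimal sketch that simulates a correctly working platform (not any real tool): both groups share the same true rate, and a pooled two-proportion z-test is applied to each run. The traffic numbers, the 10% rate, and the function name are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist


def aa_false_alarm_rate(n_tests: int = 500, n_per_group: int = 2_000,
                        rate: float = 0.10, alpha: float = 0.05, seed: int = 42) -> float:
    """Simulate A/A tests (both groups share the same true rate) and return
    the share that a pooled two-proportion z-test flags as significant."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(n_tests):
        # Identical versions: every visitor converts with the same probability
        conv_a = sum(rng.random() < rate for _ in range(n_per_group))
        conv_b = sum(rng.random() < rate for _ in range(n_per_group))
        p_pool = (conv_a + conv_b) / (2 * n_per_group)
        se = sqrt(p_pool * (1 - p_pool) * 2 / n_per_group)
        z = (conv_b - conv_a) / n_per_group / se
        p_value = 2 * (1 - NormalDist().cdf(abs(z)))
        flagged += p_value < alpha
    return flagged / n_tests


rate = aa_false_alarm_rate()
print(f"{rate:.1%} of A/A tests looked 'significant'")
```

Even on this healthy simulated setup, roughly 5% of A/A runs look “significant” by chance at a 0.05 threshold. A platform becomes suspect when identical versions disagree far more often than that across repeated A/A tests.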

A/B testing is good, but it wastes time and money if you don’t have the volume of users and the infrastructure.

Moving from A/B testing to business experimentation.

A/B testing ≠ Experimentation.

A/B testing is one way to experiment, but not the only way.

For small businesses and startups, every decision matters. As mentioned above, you shouldn’t spend time changing buttons; instead, focus on more significant changes like product offers or signup options.

A/B testing is excellent for button tweaks, but it’s not the right tool for testing new features and understanding how people feel about them.

By putting A/B testing aside, you can aim for higher-impact experiments and maximize your business impact.

Finding the right experiment to make better business decisions?

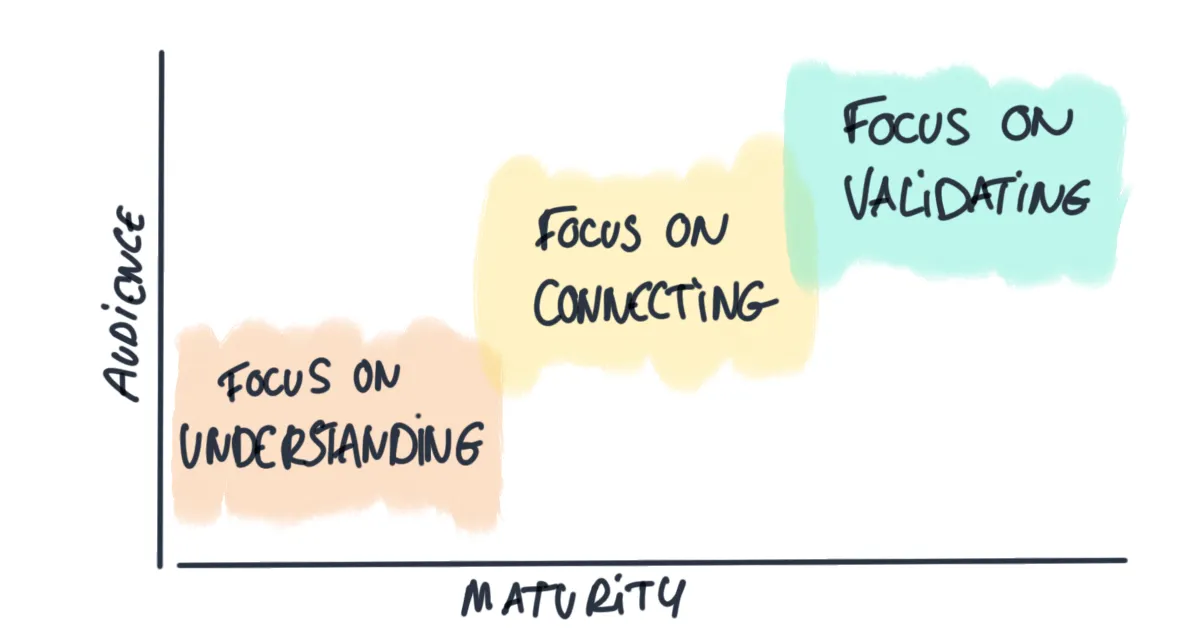

Depending on your business maturity and your access to an audience, you want to find the right tool to get the right insights.

In every business lifecycle, there are at least 3 phases you need to consider.

Low maturity, low audience

We all need to start somewhere, right?

You focus on direct, “in-lab” insights from users, mostly through interviews. You want to understand their desires and fears, what drives them forward, and what they want to achieve.

At that stage, who cares about the color of your button?

Middle maturity, middle access

You gained some traction, congratulations. Now what?

You want to look for patterns in your users' insights. Talking to more users gives you perspective. It’s time to leverage the insights and see which ones stick the most.

At this stage, every conversation with a user or customer can be clustered around a few key elements of value. You want to make sure those findings resonate with more than one person.

High maturity, high access

You made it big; it’s time to have fun with behavioral data.

Now that you have enough people on your product, you let the data talk.

Do you have a question? Set up an experiment and validate (or invalidate) your assumptions.

Now is the time to test interactions on the page, title length, and, of course, the radius of buttons.

Moving from data-driven to user-driven.

As a business owner, marketer, or product person, your job is to help your users reach their goals. To do so, you collect and analyze insights to make better product and business decisions.

There is nothing wrong with being data-driven. The problem is putting too much importance on data without context, and A/B testing encourages this. Instead of waiting for test results to make decisions, look for other ways to collect user insights and make decisions faster.

A/B testing is only relevant if you have a LOT of users. Otherwise, it’s a waste of time and money.

Moving from data-driven to user-driven means you rely on more than numbers to make decisions. You believe data is crucial, but you are not afraid to add some context before deciding.

It also means that if the data says A, you dare to ask your users why.

Finally, it means that you don’t believe there is a universal truth about the color of a button, and you accept that.

Conclusion

A/B testing is reassuring; it makes us feel rational and in control. We love to have rational results to push our ideas and support our decisions among our peers.

However, we saw that using A/B testing alone is a bad idea. It will delay your decision, cost you money, and give you unreliable results.

While A/B testing is popular, it’s not always the best approach. Especially for startups and small businesses, gaining user insights through different experimentation methods can be more effective.

There are plenty of experiments and tools to help you be more user-driven and make better business decisions. Depending on your idea’s maturity, goal, and business context, choosing the right experiment will help you gain user insights and maximize your business impact.

Far more than A/B testing the radius of every button.

References

- The Case Against A/B Testing (yes, I know it sounds crazy)

- GoodUI.org for A/B testing success patterns

- PostHog learnings about A/B tests

- The Ultimate Guide to A/B Testing (Ronny Kohavi)